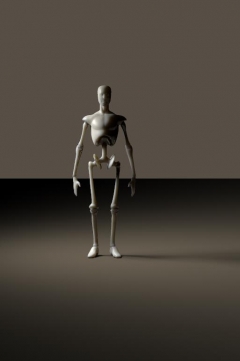

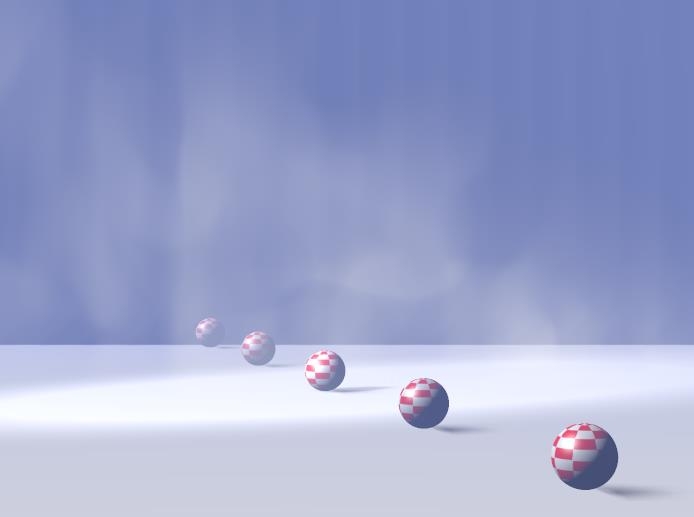

Poser lights have their shortcomings when I want to use them as real-life lamps. Poser lights are extremely small, so they produce very hard shadows, and they lack the atmospheric scattering that is always present in real-life environments. And… real-life behavior takes lots of resources when rendering. All this can be compensated for.

Shadow Bias

Then we’ve got the magical Shadow Minimum Bias property. What does it do, and why?

Well, technically it takes a shadow-casting surface, shifts it a bit towards the light, calculates the shadows on that uplifted surface, and then assigns those shadows to the actual shadow-casting surface in its original position.

The advantage comes when handling displacement maps and small-scale surface detail. Without the bias, every detail has to be taken into account, as each one casts a tiny shadow onto the surface. Those shadows look quite unnatural: in reality, such minor irregularities don’t cast shadows at all. The light bends around them, and the scattering in the atmosphere makes the thin shadow fade away. Besides that, it’s an enormous job for the shadow calculations. With the bias, only details that rise (or sink) more than this value are taken into account. This enhances the natural feel of the shadows, and it saves processing effort as well.

The downside is that it creates an artifact: the shadows themselves are somewhat displaced relative to the objects. To a minor extent this is acceptable, but larger values produce visibly incorrect results.

Actually, the default 0.8 is quite a lot already, so in my opinion one should never exceed 1. On the other hand, 0 breaks the renderer, so 0.000001 is the real minimum here, and it will make every surface detail cast a shadow. Perhaps 0.1 would be a nice setting.
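To make the effect of these values concrete, here is a toy sketch, not Poser’s actual shadow code. It assumes a one-dimensional heightfield (a row of surface heights in arbitrary scene units), a distant light coming in low from one side at 10 degrees, and a bias applied as a simple vertical threshold: a sample towards the light only counts as an occluder when it rises above the light ray by more than the bias. The function names `shadowed` and `count_shadowed` are hypothetical.

```python
import math


def shadowed(heights, dx, i, light_elev_deg, bias):
    """Toy biased shadow test for sample i of a 1D heightfield.

    The light comes from the +x side at elevation light_elev_deg (degrees).
    Hypothetical model of a shadow bias, not Poser's renderer code.
    """
    slope = math.tan(math.radians(light_elev_deg))  # height gained per unit x along the ray
    for j in range(i + 1, len(heights)):
        ray_height = heights[i] + slope * (j - i) * dx
        # A sample towards the light only occludes if it pokes above
        # the ray by more than the bias.
        if heights[j] > ray_height + bias:
            return True
    return False


def count_shadowed(heights, dx, light_elev_deg, bias):
    """Number of samples of the heightfield that end up in shadow."""
    return sum(shadowed(heights, dx, i, light_elev_deg, bias) for i in range(len(heights)))


# Scenario 1: a flat surface with one tiny 0.05-high bump (surface detail).
bumpy = [0.0] * 20
bumpy[10] = 0.05
# Scenario 2: a flat surface with one 1.0-high occluder (a real object).
blocked = [0.0] * 20
blocked[15] = 1.0

for bias in (0.000001, 0.1, 0.8):
    print(bias, count_shadowed(bumpy, 0.1, 10.0, bias), count_shadowed(blocked, 0.1, 10.0, bias))
# 1e-06 2 15
# 0.1 0 15
# 0.8 0 11
```

Under these assumptions, the tiny bump only casts a shadow at the near-zero bias, while at 0.8 the shadow of the real object starts to shrink away from it, which is the kind of displacement artifact that makes large bias values look wrong.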

Ambient Occlusion

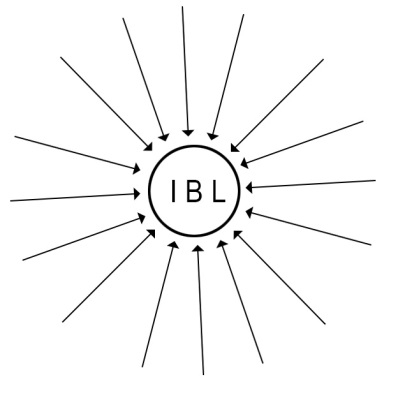

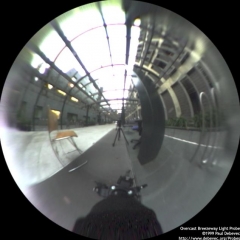

Direct lights cast direct shadows, either mapped or raytraced. Indirect and image-based Skydome lights generate an omnipresent ambient lighting that hardly casts shadows at all. But that is incorrect: in my room, the lighting level under my chair is much higher than under my PC cabinet. Objects and surfaces close to each other hamper the spread of ambient lighting; they occlude each other from the ambient light.

In the early days of Poser, this Ambient Occlusion (AO) was dealt with as a material property, hence the Ambient_Occlusion node in the material definitions. Actually this is weird, as AO is not the result of a material but of the proximity of objects or object elements (that is, of the shape of an object). Moreover, AO is mainly relevant to Diffuse IBL lights, which generate the shadowless omnipresent ambience.
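The chair versus cabinet example can be put into numbers with a toy occlusion estimate. This is a generic Monte Carlo ambient occlusion sketch, not Poser’s Ambient_Occlusion node: it assumes a uniform sky, a floor point directly below a flat horizontal disk (standing in for the chair seat or cabinet bottom), and cosine-weighted sampling of the sky hemisphere. The dimensions and function names are hypothetical. Note that only geometry enters the calculation, no material at all.

```python
import math
import random


def ambient_visibility(h, r, n=100_000, seed=1):
    """Monte Carlo estimate of the share of uniform ambient (sky) light that
    reaches a floor point directly below a flat horizontal disk of radius r
    at height h. Toy model; the disk stands in for a chair seat or cabinet."""
    rng = random.Random(seed)
    unblocked = 0
    for _ in range(n):
        # Cosine-weighted hemisphere direction (Malley's method): pick a point
        # uniformly in the unit disk and lift it onto the hemisphere.
        u1, u2 = rng.random(), rng.random()
        s = math.sqrt(u1)                 # sine of the angle from straight up
        phi = 2.0 * math.pi * u2
        dx, dy = s * math.cos(phi), s * math.sin(phi)
        dz = math.sqrt(1.0 - u1)          # > 0 because random() < 1
        t = h / dz                        # ray parameter where it reaches height h
        hx, hy = dx * t, dy * t
        if hx * hx + hy * hy > r * r:     # ray passes beside the disk: sky is visible
            unblocked += 1
    return unblocked / n


def ambient_visibility_exact(h, r):
    """Closed form for the same geometry: the disk blocks r^2 / (h^2 + r^2)."""
    return h * h / (h * h + r * r)


chair = ambient_visibility(0.45, 0.25)    # hypothetical seat: 0.45 m up, 0.25 m radius
cabinet = ambient_visibility(0.05, 0.25)  # hypothetical cabinet: 0.05 m gap, same radius
print(round(chair, 2), round(cabinet, 2))
```

The estimates should land close to the closed-form values of about 0.76 under the chair and about 0.04 under the cabinet: the closer the occluder, the darker the ambient light below it.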

More on that later.

Light arithmetic

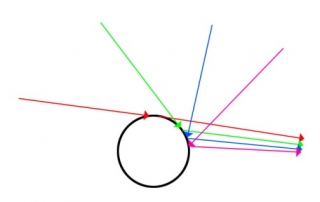

In real life, when light shines on my retina or on the backplane of my camera, one light shining on a surface fires some percentage of the light-sensitive elements. A second light then fires the same percentage of the remaining elements. As a result, the share of non-fired elements shrinks towards zero as lights are added, and the captured lighting level approaches 100% without ever exceeding it. Photoshop (and similar image editors) does something similar when adding layers with the Screen blending mode.
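This "fire a percentage of what is left" rule is easy to write down. The sketch below assumes lighting levels expressed as fractions from 0 to 1; the function name `screen` is just a label. For two layers it reduces to the familiar Screen formula 1 - (1 - a)(1 - b).

```python
def screen(levels):
    """Combine lighting levels (0..1) the way a retina or sensor saturates."""
    remaining = 1.0               # share of light-sensitive elements not yet fired
    for level in levels:
        remaining *= 1.0 - level  # each light fires `level` of what is left
    return 1.0 - remaining        # captured level: approaches but never exceeds 1.0


print(screen([0.25, 0.25]))  # 0.4375
print(screen([0.25] * 5))    # 0.7626953125
print(screen([1.0, 1.0]))    # 1.0
```

However many lights are stacked, the result stays at or below 100%, so this model can never overlight.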

Poser, however, just multiplies and adds up. A 50% white light on a 50% white object results in a 50% x 50% = 25% lighting level. A 100% white light plainly lighting a 100% white object results in a 100% lighting level. Two such lights make a 50% lighting level in the first case, and 2 x 100% = 200% in the second. The latter gets clipped back to 100% when the final output is produced, resulting in overlit areas. And even in the first case, five lights on the grey object (5 x 25% = 125%) will cause overlighting too.
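For comparison, the multiply-and-add arithmetic above can be sketched the same way. This is a simplified model of the paragraph, not Poser’s shading code: it assumes grey values from 0 to 1, a surface facing each light head-on (no falloff with angle), and clipping only when the final output is produced. The function name is hypothetical.

```python
def poser_light_sum(light_levels, surface):
    """Simplified additive model: each light multiplies with the surface
    value, contributions are summed, and only the final output is clipped."""
    raw = sum(level * surface for level in light_levels)
    return raw, min(raw, 1.0)  # (internal level, level in the final image)


print(poser_light_sum([0.5, 0.5], 0.5))  # (0.5, 0.5)
print(poser_light_sum([1.0, 1.0], 1.0))  # (2.0, 1.0): overlit
print(poser_light_sum([0.5] * 5, 0.5))   # (1.25, 1.0): overlit
```

Unlike the Screen-style combination, the internal level can grow past 100%, and everything above it is simply lost in the clipped image.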

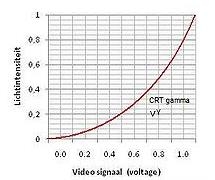

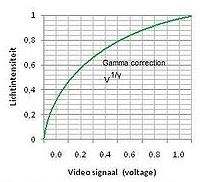

Things will be slightly different when Gamma Correction is applied. First, all lights and objects get anti-gamma-corrected (darkened): let’s say 50% then reads as 20%, while 100% stays at 100%. In the latter case nothing changes: one light on a white surface makes 100%, and two lights make an overlit area in the render. The first case, however, produces a 20% x 20% = 4% lit area, two lights make 8% (Poser still just adds up), and that intermediate result is then Gamma Corrected to, say, 35% instead of the 50% without GC.

Even 24 lights add up to only 24 x 4% = 96%, which gets Gamma Corrected to 98% in the final result. In other words, Gamma Correction prevents severe overlighting to some extent. Actually, it dampens all effects of lighting and shadowing.
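The dampening can be checked with a small sketch. It assumes a plain power-law gamma of 2.2 applied to both the light and the surface values, Poser-style adding of the lights, and clipping right before the final gamma correction. It is an illustration of the arithmetic above, not Poser’s render pipeline, and the function names are hypothetical.

```python
GAMMA = 2.2  # assumed plain power-law gamma


def to_linear(v):
    """Anti-gamma-correct (darken) an input value; 0.5 becomes about 0.22."""
    return v ** GAMMA


def to_output(v):
    """Clip at 100%, then gamma-correct for the final image."""
    return min(v, 1.0) ** (1.0 / GAMMA)


def render_with_gc(light, surface, n_lights):
    # Linearize light and surface, multiply, then add up the lights as Poser does.
    return to_output(n_lights * to_linear(light) * to_linear(surface))


def render_without_gc(light, surface, n_lights):
    return min(n_lights * light * surface, 1.0)


for n in (1, 2, 5, 24):
    print(n, render_without_gc(0.5, 0.5, n), round(render_with_gc(0.5, 0.5, n), 3))
```

With these assumptions, 50% lights on a 50% surface give roughly 0.25, 0.34, 0.52 and 1.0 with GC for 1, 2, 5 and 24 lights, against 0.25, 0.5, 1.0 and 1.0 without it. Because an exact 2.2 turns 50% into about 22% rather than the rounded 20%, the clipping point comes a bit earlier (around 22 lights) than the rounded figures suggest, but the dampening is the same.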

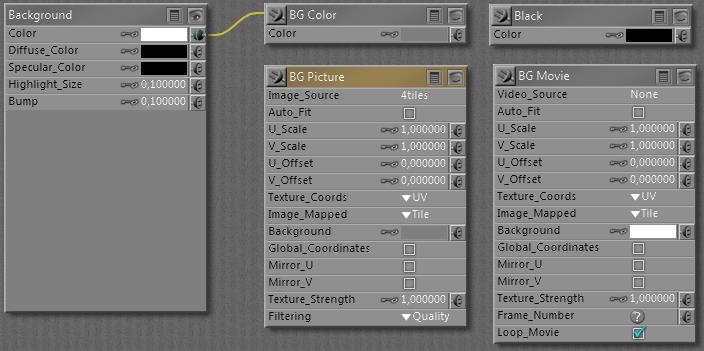

Light materials

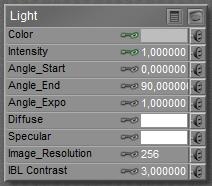

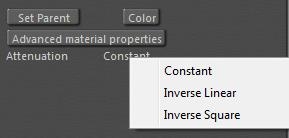

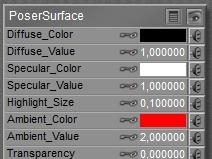

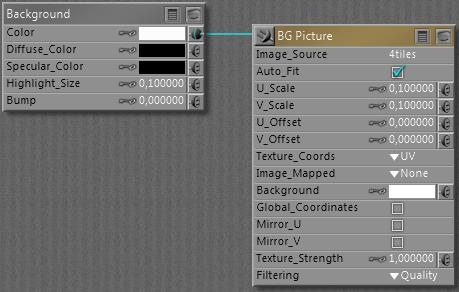

Poser direct lights appear in the Material Room as well, with Color, Intensity, Diffuse and Specular as the main parameters. Other parameters mainly serve legacy situations and can be ignored.

Color and Intensity team up (multiply) to light the Preview and give me light on my work. While rendering, the Diffuse and Specular channels kick in as well, each multiplying with the Color x Intensity just mentioned.

This implies that blacking out the Diffuse channel switches the light off for diffuse lighting in the render, while it still lights the preview and still produces specular highlights in the render. This is great when working with IDL lighting, which caters for all diffuse lighting itself but fails to light the preview and does not produce specularity either. Similarly, I can produce lights that make diffuse light only, with the Specular channel blacked out. Or lights that contribute only to the preview, with both Diffuse and Specular blacked out.

I can also have strong lights in the preview but have them dimmed in the render, by using reduced intensities (grays) in the Diffuse and Specular channels. And I can confuse myself a lot by using a white Color for the preview but colored Diffuse and Specular channels while rendering. I never do that, though.
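The channel arithmetic described in this section can be summarized in a few lines. This sketch only models the multiplications as described here; the class and function names are hypothetical and this is not PoserPython. Colors are assumed to be RGB triples from 0 to 1, and the fields mirror the Material Room parameters Color, Intensity, Diffuse and Specular.

```python
from dataclasses import dataclass

RGB = tuple[float, float, float]  # color as (r, g, b), each 0..1


@dataclass
class LightMaterial:
    """Hypothetical stand-in for a direct light's Material Room parameters."""
    color: RGB = (1.0, 1.0, 1.0)
    intensity: float = 1.0
    diffuse: RGB = (1.0, 1.0, 1.0)
    specular: RGB = (1.0, 1.0, 1.0)


def _mul(a, b):
    return tuple(x * y for x, y in zip(a, b))


def preview_light(m):
    """Preview: Color x Intensity only."""
    return tuple(c * m.intensity for c in m.color)


def render_diffuse(m):
    """Render, diffuse part: Color x Intensity x Diffuse."""
    return _mul(preview_light(m), m.diffuse)


def render_specular(m):
    """Render, specular part: Color x Intensity x Specular."""
    return _mul(preview_light(m), m.specular)


BLACK = (0.0, 0.0, 0.0)
idl_helper = LightMaterial(diffuse=BLACK)  # lights the preview, adds specular only
dimmed = LightMaterial(diffuse=(0.3, 0.3, 0.3), specular=(0.3, 0.3, 0.3))
print(preview_light(idl_helper), render_diffuse(idl_helper), render_specular(idl_helper))
print(preview_light(dimmed), render_diffuse(dimmed))
```

The first light is the IDL helper: full preview and specular, no diffuse. The second is the strong-in-preview, dimmed-in-render variant.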